Recent developments on AI protocols: MCP and future possibilities

This post was originally published on Xomnia’s blog.

The AI landscape is evolving at an immense pace, with recent developments signaling a significant shift towards connectedness of LLMs, agents, and third-party applications. Most recently, Anthropic and Google open-sourced their AI protocols, MCP and A2A respectively. These moves have the potential to reshape how we develop AI products. Let’s dive deeper into MCP and critically appraise its implications for organizations and developers.

Problem: an isolated LLM is less useful than you think

Large Language Models (LLMs) are fundamentally probabilistic models. They predict the next word or token in a sequence based on statistical patterns learned from vast amounts of text. On their own, LLMs therefore operate in isolation: they are not connected to personal data such as your emails. If you want an AI assistant for summarising, prioritising, and replying to emails, an LLM alone is not sufficient.

To connect LLMs to such capabilities, function calling was introduced. Function calling provides a way to connect LLMs with external tools and services. In practice, it boils down to writing code that interacts with the tool and documenting it in such a way that an LLM can understand when to call which function. For an AI email assistant, for example, you could write functions for fetching, prioritising, summarising, and sending emails. Based on your chat input, the LLM identifies whether you’re aiming for such a function call and, if so, requests it with the appropriate arguments; the surrounding application then executes the function. If everything goes well, the LLM responds to your request with the function’s output in a readable format.
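The flow described above can be sketched in a few lines of Python. This is a minimal illustration, not any vendor's API: the email functions, the `TOOLS` registry, and the JSON shape of `model_output` are all assumptions made for this example, and the model's reply is hard-coded rather than produced by a real LLM.

```python
import json
from typing import Callable


# Hypothetical email tools; real ones would talk to a mail API.
def fetch_emails(unread_only: bool = True) -> list[dict]:
    """Fetch emails from the inbox, optionally only the unread ones."""
    inbox = [
        {"id": 1, "subject": "Invoice overdue", "unread": True},
        {"id": 2, "subject": "Lunch on Friday?", "unread": False},
    ]
    return [mail for mail in inbox if mail["unread"] or not unread_only]


def send_email(to: str, body: str) -> str:
    """Send an email with the given body to one recipient."""
    return f"sent to {to}"


# Registry mapping tool names to the functions that implement them.
TOOLS: dict[str, Callable] = {fn.__name__: fn for fn in (fetch_emails, send_email)}


def describe_tools() -> list[dict]:
    """Build the tool descriptions that are sent to the model with the chat input."""
    return [{"name": name, "description": fn.__doc__} for name, fn in TOOLS.items()]


def dispatch(model_output: str):
    """Execute the function that the model asked for in its structured reply."""
    call = json.loads(model_output)
    return TOOLS[call["name"]](**call["arguments"])


# Stand-in for the model's reply to "Show me my unread emails" (assumed format).
model_output = '{"name": "fetch_emails", "arguments": {"unread_only": true}}'
result = dispatch(model_output)
print(result)
```

The docstrings double as the documentation the model sees, which is why the wording of each description matters as much as the code behind it.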

Now, there are some challenges with function calling:

- Platform and framework dependency: Multiple vendors and open-source initiatives provide some way of connecting LLMs to tools. Think of ChatGPT having its own plugin store. Alternatively, Python frameworks such as LlamaIndex, Hugging Face, or LangChain provide similar functionality, but only within those frameworks. Function calling is therefore very platform-dependent, which leads to redundant implementations.

- Tool management: Let’s go back to our email assistant, and say that we want to augment it with 1) file updates on your computer, 2) an online project/task management tool, and 3) your calendar. These augmentations require quite some code to be developed, potentially unique to your use case. The technical challenges of connecting your LLM to these tools grow with the number of tools you want to integrate. In addition, for several frameworks, function calling requires explicit declaration of the tools that can be used, which limits flexible management of the augmented capabilities.

- Multi-step actions and shared context: Let’s say your LLM has some email tools available and you ask: “Check my inbox today for unread mails. Prioritise based on urgency. Answer the ones that need a simple reply. List the ones I need to take care of.” Chances are the LLM will struggle with this request, as it requires a stepwise process spanning multiple functions (fetch, prioritise, reply). Whilst newer reasoning models might resolve this struggle, it plays an important role in how MCP is set up.

Towards a standard? Enter Model Context Protocol

In November 2024, Anthropic released MCP as an open-source project designed to standardise how LLMs can be connected to external tools and data as context.

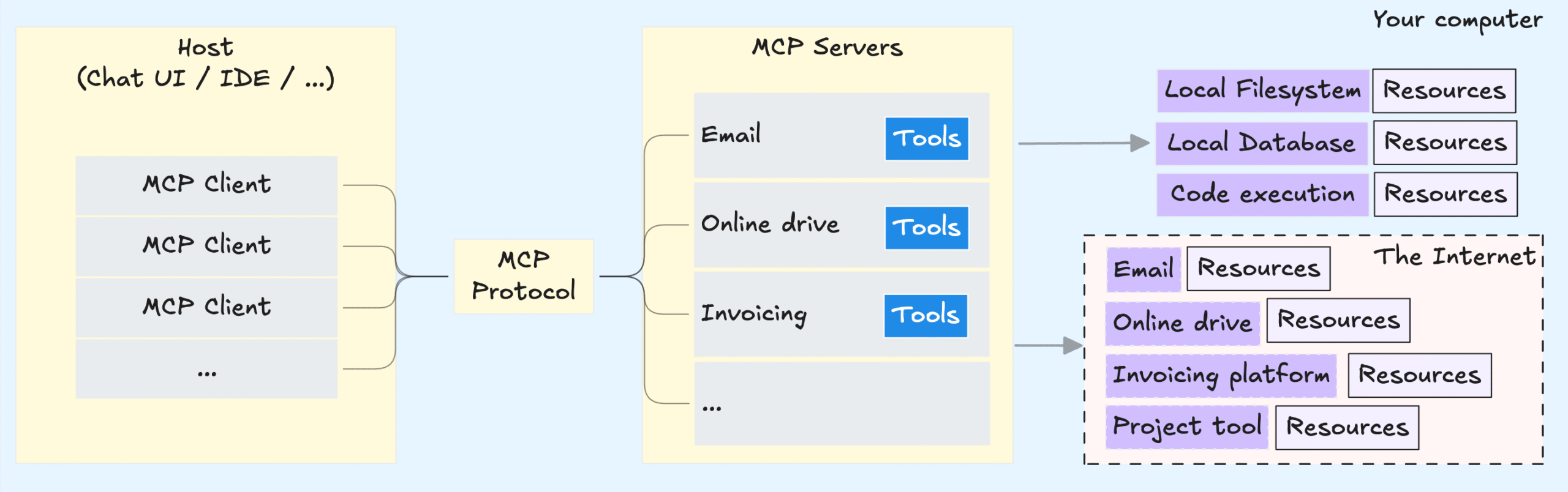

The MCP architecture consists of three key components:

- Host: Think of the host as the user interface. Most often, this is a chat application such as ChatGPT or Claude Desktop, or an IDE if you’re into coding.

- Server: The server is the component that exposes interactions with data resources and tools. More specifically, the server is a background process started from a script or package. Depending on the server, its capabilities can vary from simple tools (e.g., fetching weather data) to more complex tasks with extensive permissions (e.g., managing files on your laptop or updating the contents of a database). In addition, MCP servers can contain prompt templates that provide predefined instructions.

- Client: Lastly, the client component forms the bridge between the LLM and a server. Clients are created by the host and have 1:1 relations with MCP servers. The main purposes of a client are managing the connection, communication, and discovery of capabilities on the server side.

Running MCP servers

In its current state, the bread and butter of MCP is in running servers. Once you find a server you want to use, usually it’s a matter of going through some authentication instructions and pointing your chat interface to the MCP server.

The MCP server can be run as a process either locally or remotely. How the host application (your chat interface) starts the MCP server (e.g., via Python, TypeScript, or Java) depends on the language and framework used to develop the server.
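For a locally run server, starting it roughly amounts to launching a child process from a configuration entry. The Python sketch below assumes an entry with `command`, `args`, and `env` fields, the same three fields used in the Slack configuration in this post. The `build_launch` helper, the `DEMO_TOKEN` variable, and the one-line stand-in "server" are hypothetical and only there so the example runs anywhere Python is installed.

```python
import os
import subprocess
import sys

# Hypothetical config entry. The "server" here is a one-line Python program
# that only reports whether it received its token.
server_config = {
    "command": sys.executable,
    "args": ["-c", "import os; print('server ready, token set:', 'DEMO_TOKEN' in os.environ)"],
    "env": {"DEMO_TOKEN": "not-a-real-token"},
}


def build_launch(config: dict) -> tuple[list[str], dict[str, str]]:
    """Turn a config entry into the argv and environment for a child process."""
    argv = [config["command"], *config.get("args", [])]
    # The server inherits the host's environment plus its own settings.
    env = {**os.environ, **config.get("env", {})}
    return argv, env


argv, env = build_launch(server_config)
completed = subprocess.run(argv, env=env, capture_output=True, text=True, check=True)
print(completed.stdout.strip())
```

A real host would keep the process alive and exchange messages with it instead of waiting for it to exit; the sketch only shows how the configuration maps onto a process launch.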

For example, augmenting your chat interface with Slack functionality is a matter of adding a snippet like the one below to your configuration. This will spin up an MCP server using Node (via npx) and code provided through a package repository (@modelcontextprotocol/server-slack). In other cases, the code can be as simple as a Python file on your local computer. Note that the code for an MCP server is generally a collection of well-documented utilities, so that an LLM can dispatch a user query to the right tool.

    {
      "mcpServers": {
        "slack": {
          "command": "npx",
          "args": [
            "-y",
            "@modelcontextprotocol/server-slack"
          ],
          "env": {
            "SLACK_BOT_TOKEN": "xoxb-your-bot-token",
            "SLACK_TEAM_ID": "T01234567",
            "SLACK_CHANNEL_IDS": "C01234567, C76543210"
          }
        }
      }
    }

But what are the implications of this?

- For AI users: The benefit of MCP is in making it easier to augment your AI app through existing MCP servers. Since its release, many MCP servers have been developed by enterprises and open-source enthusiasts. If you’re using an AI app that supports MCP, giving your LLM access to your local filesystem, internet browsing capabilities, online productivity tools, and much more has become a matter of referencing the right server.

- For developers: MCP aims to reduce development time by standardising the development process and removing the need to implement custom connectors repeatedly. Thanks to the modularity of MCP, it’s also more convenient to extend the functionality of an MCP server without needing to update other parts of the application. Lastly, there are official SDKs for Python, TypeScript, Java, Kotlin, and C#.

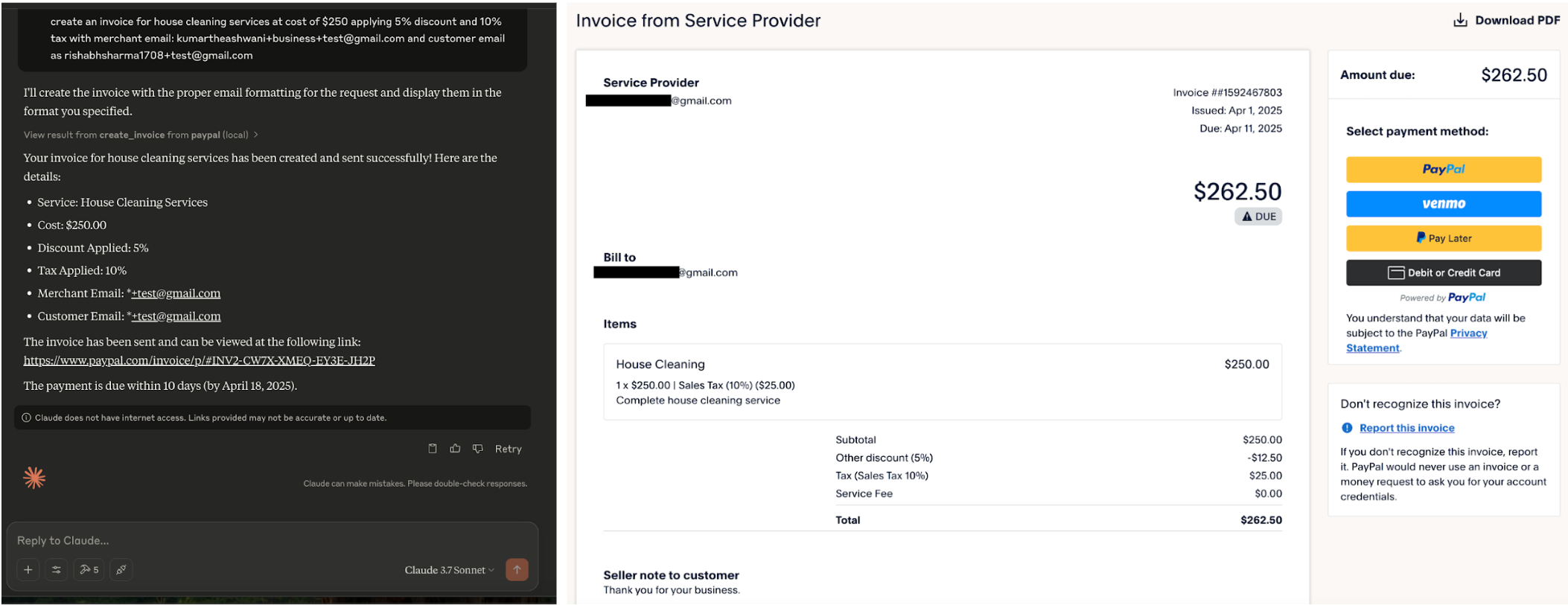

- For enterprises: As a product or service provider, MCP gives you an opportunity to offer access to your services through an official, centrally maintained integration. If you want to provide customers with the ability to execute or search something on your platform via their own preferred chat interface, developing an official MCP server can be a valuable option. Customers can “load” the MCP server as if it were a plugin and use chat to fetch data or execute actions. An example is PayPal releasing an MCP server that enables users to execute some business tasks by interacting with the LLM.

Critical evaluation and future directions

Security

MCP is evolving rapidly, and features for secure and authenticated calls to MCP servers have been added. However, many security threats still exist. Many MCP servers are community-driven, and it can be tempting to use one of them, but this comes with risk. A malicious MCP server could read or share files from your local filesystem even if it is presented as doing nothing more than fetching weather updates. At this stage, one should be careful about which MCP servers to use. In their roadmap, the team behind MCP shared an intent to develop an MCP registry and compliance test suites. These will be pivotal for the adoption of MCP in AI and agentic workflows.

Other developments on protocols: Google’s A2A

A few months after MCP’s release, in April 2025, Google open-sourced its Agent2Agent (A2A) protocol. In the announcement, Google describes A2A as complementary to MCP: MCP is about providing helpful tools and context to agents, whilst A2A is about building agents that are capable of connecting with each other. MCP and A2A can potentially shape how AI agents will be developed in the near future. The introduction of both protocols signals a growing emphasis on standardized communication and contextual awareness within these systems.