'Can you book a meeting with John friday at 2PM?' LLMs and structured data outputs

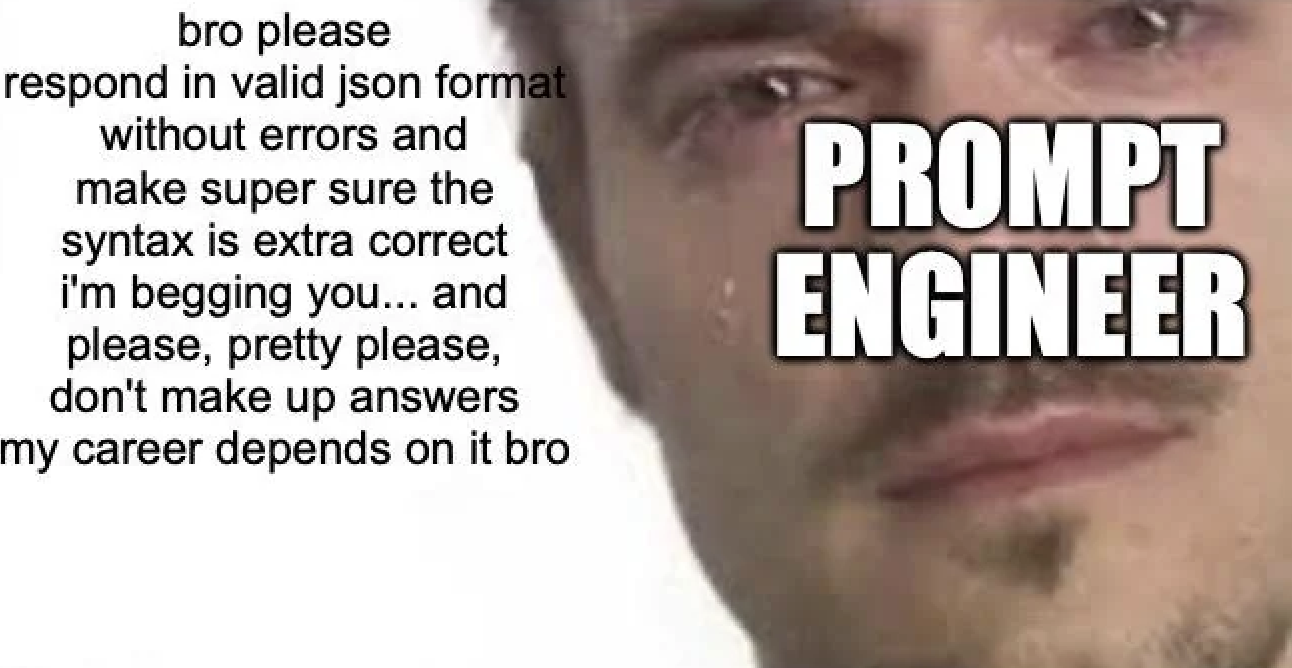

Getting large language models to return structured, valid data is difficult, even with carefully engineered prompts. This is a fundamental challenge for AI applications that rely on parsing human input. It’s the reason why this meme exists:

Luckily, the industry has responded with several solutions to this problem. In this post I explore hands-on examples and some recipes that can serve as a starting point. First, let’s frame the problem.

Why is this so hard?

The core issue stems from how language models work. They’re trained to predict the next token based on context, not to follow strict grammatical rules or data schemas. When you ask an LLM to output JSON, it’s essentially doing free-form text generation while taking your request into account. Several challenges arise when generating structured data output:

- Training data bias: Models are trained mostly on diverse free-form text, with strictly structured data formats making up only part of it

- No built-in validation: LLMs usually have no mechanism that ensures the output follows a specific schema

- Token-level prediction: Most models are trained to predict one token at a time, making it difficult to maintain structural consistency across the entire output
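Because nothing inside the model enforces a schema, applications usually have to check the output after the fact. The following is a minimal sketch of such a check using only the standard library. The field names and the `validate_meeting` helper are hypothetical, chosen purely for illustration.

```python
import json

# Hypothetical schema: required field name -> expected Python type
REQUIRED_FIELDS = {"attendees": list, "date": str, "time": str}


def validate_meeting(raw: str) -> list[str]:
    """Return a list of problems found in the model output (empty if valid)."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value is not a JSON object"]
    problems = []
    for field, expected in REQUIRED_FIELDS.items():
        if field not in data:
            problems.append(f"missing field: {field}")
        elif not isinstance(data[field], expected):
            problems.append(f"wrong type for field: {field}")
    return problems


# A well-formed answer, an answer with renamed fields, and plain chat text
print(validate_meeting('{"attendees": ["John"], "date": "Friday", "time": "2:00 PM"}'))
print(validate_meeting('{"participants": "John", "date": "Friday"}'))
print(validate_meeting("Sure! Here is your meeting."))
```

A check like this only detects problems; it does not prevent the model from producing them in the first place.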

Industry response

Multiple solutions have been proposed to tackle this problem. Here are some notable examples:

- OpenAI’s Structured Outputs

- Anthropic’s guide on getting more reliable outputs

- LangChain’s Output Parsers

- Pydantic AI’s schema validation

- Outlines’ constrained decoding. I find this one the most interesting. Outlines relies on constrained decoding, a technique that restricts token generation so that the output adheres to syntax and formatting rules. A paper describes the technique in detail.
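To make the idea of constrained decoding concrete, here is a toy sketch. It is not the Outlines API: the vocabulary, the random "logits", and the small state machine are all made up for illustration. At every step, only tokens that the grammar allows in the current state are considered, so the result is well-formed no matter what the model would have preferred.

```python
import random

# Toy vocabulary; a real tokenizer has tens of thousands of tokens.
VOCAB = ["{", "}", '"time"', ":", '"2PM"', '"3PM"', "Sure", "!", "<eos>"]

# Hypothetical finite-state grammar for outputs like {"time":"2PM"}:
# state -> {allowed token: next state}
GRAMMAR = {
    0: {"{": 1},
    1: {'"time"': 2},
    2: {":": 3},
    3: {'"2PM"': 4, '"3PM"': 4},
    4: {"}": 5},
    5: {"<eos>": None},
}


def fake_logits(rng: random.Random) -> dict[str, float]:
    """Stand-in for a model forward pass: one random score per token."""
    return {token: rng.uniform(-1.0, 1.0) for token in VOCAB}


def constrained_decode(seed: int = 0) -> str:
    rng = random.Random(seed)
    state, output = 0, []
    while state is not None:
        logits = fake_logits(rng)
        allowed = GRAMMAR[state]
        # Mask: only tokens the grammar permits in this state are candidates,
        # even if "Sure" or "!" received a higher score.
        best = max(allowed, key=lambda token: logits[token])
        if best != "<eos>":
            output.append(best)
        state = allowed[best]
    return "".join(output)


print(constrained_decode())
```

Real implementations derive the allowed-token sets from a JSON schema or regular expression and apply the mask to the actual model logits, but the principle is the same.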

Systematic review of techniques

I’ve found this systematic review quite useful. Besides laying out the landscape of techniques for improving structured output generation, it identifies several key strategies:

Data Augmentation: Using LLMs to expand the available training or input data:

- data annotation

- knowledge retrieval

- inverse generation

- synthetic datasets for fine-tuning

Prompt Design:

- Dynamic Q&A prompting with question templates using extracted information

- Few-shot: providing 2-3 examples in the prompt to demonstrate the desired output format.

- Chain of thought: prompting the model to reason step by step before it produces the output in the desired format.
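As an illustration of the few-shot approach, the sketch below assembles a prompt from two worked examples. The examples, the field names, and the `build_few_shot_prompt` helper are hypothetical; the resulting string would be sent to whichever model you use.

```python
import json

# Hypothetical examples: (user request, desired structured output)
EXAMPLES = [
    ("Lunch with Ana tomorrow at noon",
     {"attendees": ["Ana"], "date": "tomorrow", "time": "12:00"}),
    ("Call Bob and Eve on Monday at 9am",
     {"attendees": ["Bob", "Eve"], "date": "Monday", "time": "09:00"}),
]


def build_few_shot_prompt(query: str) -> str:
    """Assemble instructions, worked examples, and the new query."""
    parts = ["Extract the meeting details as JSON. Reply with JSON only."]
    for text, expected in EXAMPLES:
        parts.append(f"Input: {text}\nOutput: {json.dumps(expected)}")
    # End on an open "Output:" so the model continues in the same format.
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)


print(build_few_shot_prompt("Can you book a meeting with John friday at 2PM?"))
```

The examples demonstrate the field names and value formats, which tends to be more effective than describing them in words, although it still offers no guarantee.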

Constrained Decoding Generation: see the outlines library for Python mentioned earlier.

Fine-tuned Models: Using models that were fine-tuned on structured output examples, ideally examples from the application domain. For example, extracting data from customer support conversations differs from extracting financial metrics from earnings reports.

Practical examples using OpenAI structured outputs

The use case I want to illustrate this with is using LLMs to extract meeting details. Let’s say you want to send calendar invites with an AI assistant. You ask:

'Can you book a meeting with John friday at 2PM?'

The response using model=gemma3n:e2b is as follows.

```python
from agents import Agent, Runner
from agents.extensions.models.litellm_model import LitellmModel

# Ollama should be running locally and serving this model
model = LitellmModel(model="ollama/gemma3n:e2b")

agent = Agent(
    name="Extract meeting details",
    instructions="You extract all info from user input to create a meeting details object",
    model=model,
)


async def run_query(agent, query):
    """Helper function to reuse later"""
    result = await Runner.run(agent, query)
    final_output = result.final_output
    print(f"Query: {query}")
    print(f"LLM response (type: {type(final_output)}):")
    # Plain-text answers are printed as-is, Pydantic objects as JSON
    if isinstance(final_output, str):
        print(final_output)
    else:
        print(final_output.model_dump_json(indent=2))
    print("-------------")


# Top-level await works in a notebook; in a script, wrap the call in asyncio.run()
await run_query(agent, "Can you book a meeting with John friday at 2PM?")
```

````text
Query: Can you book a meeting with John friday at 2PM?
LLM response (type: <class 'str'>):
Okay, I can help with that. Here's the meeting details object based on your request:

```json
{
  "attendees": ["John"],
  "date": "Friday",
  "time": "2:00 PM"
}
```

**Note:** I've assumed "Friday" refers to the current Friday. If you want me to specify a date, please provide the full date (e.g., "Friday, October 27th").
-------------
````

There are several issues with this:

- it’s text, so some preprocessing is needed to extract the information

- the meeting details do not follow a standard format, i.e. when I repeat this request, the output field names will likely be different.
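To give an idea of the preprocessing the first point refers to, here is a minimal sketch that pulls the JSON object out of a free-form response like the one above. The `extract_json_block` helper is hypothetical, and the response text is an abbreviated copy of the model output.

```python
import json
import re

FENCE = "`" * 3  # a Markdown code fence, built here to avoid nesting fences

# Abbreviated copy of the free-form response shown above
response = (
    "Okay, I can help with that. Here's the meeting details object:\n\n"
    f"{FENCE}json\n"
    '{\n  "attendees": ["John"],\n  "date": "Friday",\n  "time": "2:00 PM"\n}\n'
    f"{FENCE}\n\n"
    "**Note:** I've assumed \"Friday\" refers to the current Friday."
)


def extract_json_block(text: str) -> dict:
    """Parse the first fenced JSON object found in a free-form response."""
    pattern = re.escape(FENCE) + r"(?:json)?\s*(\{.*?\})\s*" + re.escape(FENCE)
    match = re.search(pattern, text, flags=re.DOTALL)
    if match is None:
        raise ValueError("no fenced JSON object found in the response")
    return json.loads(match.group(1))


details = extract_json_block(response)
print(details["attendees"], details["date"], details["time"])
```

This kind of parsing is brittle: it fails as soon as the model omits the fence, and it does nothing about field names that change between runs.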

Improving the output

First, we can check whether extra instructions help.

```python
updated_instructions = """
You extract all info from user input to create a meeting details objects
Always include me (Sinan) as a participant.
You can generate a concise description email if you can derive the topic from the user input.
Write this description from my point of view. Start with a polite greeting
Format the subject of one-to-one meetings as
"{first participant} / {second participant} - check-in"
With multiple participants, create an appropriate subject based on the query.
You will stick to user input only. Do not make up any information that is not in the user input.
"""
```

In addition, we can use Pydantic to define the output type.

- Here, we define a `MeetingDetails` object that contains the meeting details.

- The `MeetingParticipant` object contains the name, email, and whether the participant is required.

```python
from datetime import datetime
from typing import Literal

from pydantic import BaseModel, Field, computed_field


class MeetingParticipant(BaseModel):
    name: str
    required: bool

    # Derived from the name, so the model never has to generate it
    @computed_field
    @property
    def email(self) -> str:
        return f"{self.name}@coolcompany.ai"


class MeetingDetails(BaseModel):
    subject: str
    datetime: datetime
    duration: int = Field(description="The duration of the meeting in minutes")
    participants: list[MeetingParticipant]
    meeting_room: str | None = None
    meeting_type: Literal["in-person", "virtual", "hybrid"] = "virtual"
    description: str | None = None
    meeting_link: str | None = None
```

The full JSON schema is as follows.

```python
{'$defs': {'MeetingParticipant': {'properties': {'name': {'title': 'Name',
                                                          'type': 'string'},
                                                 'required': {'title': 'Required', 'type': 'boolean'}},
                                  'required': ['name', 'required'],
                                  'title': 'MeetingParticipant',
                                  'type': 'object'}},
 'properties': {'subject': {'title': 'Subject', 'type': 'string'},
                'datetime': {'format': 'date-time', 'title': 'Datetime', 'type': 'string'},
                'duration': {'description': 'The duration of the meeting in minutes',
                             'title': 'Duration',
                             'type': 'integer'},
                'participants': {'items': {'$ref': '#/$defs/MeetingParticipant'},
                                 'title': 'Participants',
                                 'type': 'array'},
                'meeting_room': {'anyOf': [{'type': 'string'}, {'type': 'null'}],
                                 'default': None,
                                 'title': 'Meeting Room'},
                'meeting_type': {'default': 'virtual',
                                 'enum': ['in-person', 'virtual', 'hybrid'],
                                 'title': 'Meeting Type',
                                 'type': 'string'},
                'description': {'anyOf': [{'type': 'string'}, {'type': 'null'}],
                                'default': None,
                                'title': 'Description'},
                'meeting_link': {'anyOf': [{'type': 'string'}, {'type': 'null'}],
                                 'default': None,
                                 'title': 'Meeting Link'}},
 'required': ['subject', 'datetime', 'duration', 'participants'],
 'title': 'MeetingDetails',
 'type': 'object'}
```

With the improved instructions and output type, we can now create an `improved_agent`.

```python
improved_agent = agent.clone(
    instructions=updated_instructions,
    output_type=MeetingDetails,
)
```

So, let’s retry our previous prompt, but with the improved instructions and output type.

```text
Query: Can you book a meeting with John friday at 2PM?
LLM response (type: <class '__main__.MeetingDetails'>):
{
  "subject": "Sinan / John - check-in",
  "datetime": "2024-07-19T14:00:00Z",
  "duration": 60,
  "participants": [
    {
      "name": "Sinan",
      "required": true,
      "email": "Sinan@coolcompany.ai"
    },
    {
      "name": "John",
      "required": true,
      "email": "John@coolcompany.ai"
    }
  ],
  "meeting_room": null,
  "meeting_type": "virtual",
  "description": "Hi John, I'd like to schedule a quick check-in with you this Friday at 2 PM. Let me know if that time works for you!",
  "meeting_link": null
}
-------------
```

Now let’s do some vibe checks for several queries.

```text
Query: Please set up a 45-minute hybrid sync next Friday at 2pm London time with John D., Priya, and our vendor contact Alex at alex@vendor.io. Book room Orion-2A if available and include a Google Meet link.
LLM response (type: <class '__main__.MeetingDetails'>):
{
  "subject": "Sinan / John D. - check-in",
  "datetime": "2024-03-08T14:00:00Z",
  "duration": 45,
  "participants": [
    {
      "name": "John D.",
      "required": true,
      "email": "John D.@coolcompany.ai"
    },
    {
      "name": "Priya",
      "required": true,
      "email": "Priya@coolcompany.ai"
    },
    {
      "name": "Alex",
      "required": true,
      "email": "Alex@coolcompany.ai"
    }
  ],
  "meeting_room": "Orion-2A",
  "meeting_type": "hybrid",
  "description": "Hi team, let's have a quick sync next Friday at 2pm London time. I've booked Orion-2A and will share the Google Meet link shortly. Please let me know if this time works for you!",
  "meeting_link": "To be provided"
}
-------------
```

```text
Query: Book an in-person 90-minute design review on the first business day of next month at 10:00 in NYC. Invite Sam Taylor, Fatima R. (optional), and legal@outsidecounsel.com. Reserve room NYC-5F-Phoenix.
LLM response (type: <class '__main__.MeetingDetails'>):
{
  "subject": "Sinan / Sam Taylor - Design Review",
  "datetime": "1000-01-01T00:00:00Z",
  "duration": 90,
  "participants": [
    {
      "name": "Sam Taylor",
      "required": true,
      "email": "Sam Taylor@coolcompany.ai"
    },
    {
      "name": "Fatima R.",
      "required": false,
      "email": "Fatima R.@coolcompany.ai"
    },
    {
      "name": "legal@outsidecounsel.com",
      "required": false,
      "email": "legal@outsidecounsel.com@coolcompany.ai"
    }
  ],
  "meeting_room": "NYC-5F-Phoenix",
  "meeting_type": "in-person",
  "description": "Hi, I've scheduled a 90-minute design review for next month on the first business day at 10:00 AM in NYC. I've included Sam Taylor, and Fatima R. (optional), and legal@outsidecou conseille.com. The meeting will be held in room NYC-5F-Phoenix. Please let me know if this time works for you.",
  "meeting_link": null
}
-------------
```

```text
Query: Set a 60-minute check-in with María-Luisa, Oğuz, and tom@partner.eu next Wed at 8:30am PST. If in-person, use Huddle-Blue; otherwise, send a Microsoft Teams link.
LLM response (type: <class '__main__.MeetingDetails'>):
{
  "subject": "Sinan / María-Luisa / Oğuz - check-in",
  "datetime": "2024-03-06T08:30:00-08:00",
  "duration": 60,
  "participants": [
    {
      "name": "Sinan",
      "required": true,
      "email": "Sinan@coolcompany.ai"
    },
    {
      "name": "María-Luisa",
      "required": true,
      "email": "María-Luisa@coolcompany.ai"
    },
    {
      "name": "Oğuz",
      "required": true,
      "email": "Oğuz@coolcompany.ai"
    }
  ],
  "meeting_room": "Huddle-Blue",
  "meeting_type": "in-person",
  "description": "Hi team, let's have a quick check-in next Wednesday at 8:30 AM PST. Please use Huddle-Blue if we are in person, otherwise, I'll send a Microsoft Teams link. Looking forward to connecting!",
  "meeting_link": null
}
-------------
```

```text
Query: Set up a 40-minute coffee chat next Tue at 7:45 in the morning (Sydney time) with Chris P., emily@press.com, and Naveen. In-person at SFO-Café-Nook; no video link.
LLM response (type: <class '__main__.MeetingDetails'>):
{
  "subject": "Sinan / Chris P. - check-in",
  "datetime": "2024-07-09T07:45:00-07:00",
  "duration": 40,
  "participants": [
    {
      "name": "Chris P.",
      "required": true,
      "email": "Chris P.@coolcompany.ai"
    },
    {
      "name": "emily@press.com",
      "required": true,
      "email": "emily@press.com@coolcompany.ai"
    },
    {
      "name": "Naveen",
      "required": true,
      "email": "Naveen@coolcompany.ai"
    }
  ],
  "meeting_room": "SFO-Café-Nook",
  "meeting_type": "in-person",
  "description": "Hi team, I've scheduled a 40-minute coffee chat with Chris P., emily@press.com, and Naveen for next Tuesday at 7:45 AM (Sydney time) at the SFO-Café-Nook. No video link is needed. Let me know if this time works for you!",
  "meeting_link": null
}
-------------
```

Minor updates to the instructions and output type already improve parsing and the output values derived from minimal user input. The outputs are not yet reliable, though: dates are resolved without knowledge of the current date, and names that are already email addresses get the company domain appended. In a real-world application, you would likely want to use

- a more powerful (reasoning) model

- tools that connect to

  - the actual date/time and your calendar

  - your contact list

- some interactivity in which follow-up questions are asked to the user
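As an example of the date/time tooling, a relative expression such as "friday at 2PM" can be resolved deterministically instead of letting the model guess. The sketch below uses a hypothetical `next_weekday` helper and assumes that "friday" means the first Friday strictly after today; a real assistant would also need to handle time zones and phrases like "next month".

```python
from datetime import date, datetime, time, timedelta

WEEKDAYS = ["monday", "tuesday", "wednesday", "thursday",
            "friday", "saturday", "sunday"]


def next_weekday(name: str, today: date) -> date:
    """Return the first date strictly after `today` that falls on `name`."""
    target = WEEKDAYS.index(name.lower())
    days_ahead = (target - today.weekday() - 1) % 7 + 1  # always 1..7
    return today + timedelta(days=days_ahead)


# Fixed reference date so the example is reproducible (a Wednesday).
today = date(2025, 1, 1)
meeting_day = next_weekday("friday", today)
meeting_start = datetime.combine(meeting_day, time(14, 0))
print(meeting_start.isoformat())  # 2025-01-03T14:00:00
```

A helper like this could be exposed to the agent as a tool, so that the model only has to extract "friday" and "2PM" while the arithmetic happens in ordinary code.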

In any case, the above is a good starting point.